We’re excited to introduce Auto Optimize, our new automatic optimization tool, developed in collaboration with the University of Portsmouth.

Auto Optimize uses machine learning algorithms to automatically deploy the best-performing content, triggers or experiences toward the goals you set. This helps online businesses to get the maximum ROI from their content or triggers within the Fresh Relevance system.

Why are we adding this enhancement?

Our Chief Technology Officer, David Henderson, explains:

“Typically our clients are short on time and resources, meaning traditional A/B tests and manual intervention are not an option. Through our work with the University of Portsmouth, our new functionality within the Fresh Relevance system makes it easier to run optimizations and gives better results. Clients create different pieces of content, add to our system, then let the machine learning do the hard work of making the decisions about which to show for maximum ROI.”

How to use Auto Optimize

Auto Optimize can be used to optimize three key areas of functionality:

- SmartBlocks and Slots, such as individual image content like a hero banner: Auto Optimize allocates the most traffic to the top-performing banners.

- Trigger programs: Auto Optimize finds the best-performing email content or send times.

- Whole experiences, such as a few different versions of a flash sale: Auto Optimize works out the best-performing version and allocates it a higher proportion of traffic.

Once you’ve decided what you want to optimize with Auto Optimize (content, triggers or whole experiences), it’s simple to set it up via our Optimize Center.

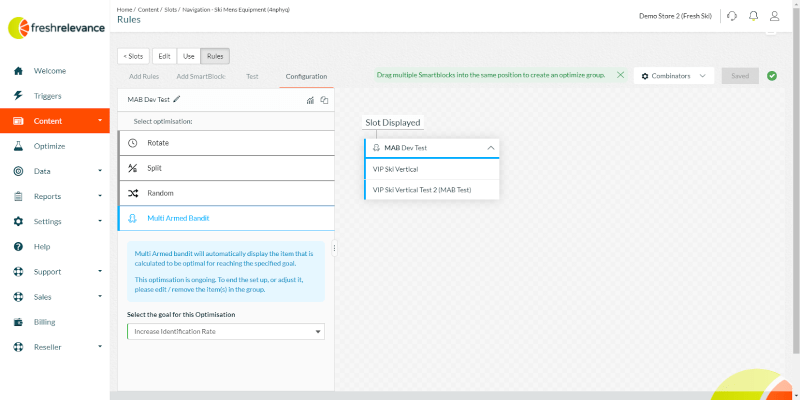

In our Marketing Rule Trees, you can drag content, triggers or experiences into the same location to let Auto Optimize determine which ones to show.

In addition to the existing configuration options of rotation, randomization and A/B test, you can now select Auto Optimize. Once selected, all you need to do is add the goal that the optimization works towards.

Currently, we have the following goals available:

- Increase conversion

- Increase average order value

- Increase identification

- Increase site visits

- Increase time on site

- Reduce web bounce rate

Once saved, the optimization appears in our Optimize Center, where you can quickly adjust it or view the results.

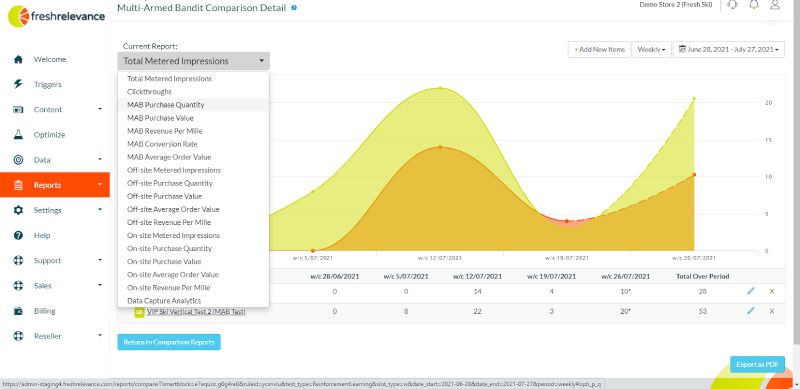

Auto Optimize then decides which of the items within the optimization to show each visitor, in order to achieve the specified goal. Reporting covers multiple optimization metrics alongside our standard metrics, so you can clearly see what data Auto Optimize is working from and what it is doing on an ongoing basis.
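For readers curious how this kind of automatic traffic allocation can work in principle, here is a minimal sketch in Python. It is not the Auto Optimize implementation: this post does not say which algorithm is used, so the sketch assumes a common technique called Thompson sampling, applied to a simple "increase conversion" goal. The class name, function name, banner names and conversion rates are all hypothetical and chosen purely for illustration.

```python
import random


class ThompsonSampler:
    """Beta-Bernoulli Thompson sampling over a fixed set of variants.

    Illustrative only: an assumed technique, not the Auto Optimize algorithm.
    """

    def __init__(self, variants, seed=None):
        self.rng = random.Random(seed)
        # One [alpha, beta] pair per variant, starting from a uniform Beta(1, 1) prior.
        self.params = {v: [1, 1] for v in variants}

    def choose(self):
        # Draw a plausible conversion rate for each variant and show the highest draw.
        draws = {v: self.rng.betavariate(a, b) for v, (a, b) in self.params.items()}
        return max(draws, key=draws.get)

    def record(self, variant, converted):
        # Update the chosen variant's posterior with the observed outcome.
        self.params[variant][0 if converted else 1] += 1


def simulate(true_rates, visitors=5000, seed=0):
    """Simulate visitors and return how many times each variant was shown."""
    sampler = ThompsonSampler(true_rates, seed=seed)
    outcome_rng = random.Random(seed + 1)
    shown = {v: 0 for v in true_rates}
    for _ in range(visitors):
        variant = sampler.choose()
        shown[variant] += 1
        # Hypothetical visitor: converts with the variant's (hidden) true rate.
        sampler.record(variant, outcome_rng.random() < true_rates[variant])
    return shown


if __name__ == "__main__":
    # Made-up conversion rates for three hypothetical hero banners.
    print(simulate({"banner_a": 0.02, "banner_b": 0.04, "banner_c": 0.15}))
```

In a simulation like this, every banner gets some traffic early on, and the share shifts toward the strongest performer as evidence accumulates. That is the general behavior described above: more traffic goes to what works, without anyone having to end a test manually.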

Testing & Optimization

Book a demo to see Auto Optimize in action and learn more about the other features in our Advanced Testing & Optimization module.