Triggered emails, such as cart abandonment alerts, are a reliable way to generate incremental revenue for your eCommerce website.

But introducing a basic triggered email program is just the start. By testing and optimizing campaigns on an ongoing basis as part of your email marketing strategy, you can fine-tune emails to increase revenue and adapt to customers’ behavior over time.

Here, we’ll cover three different split testing methods for real-time emails, when you should use them, and how to measure success.

Before you start…

Triggered email campaigns have so many elements that it’s tempting to test every possible variable. But if you test at random just to see what happens, there’s no guarantee the results will support your company’s overall marketing objectives.

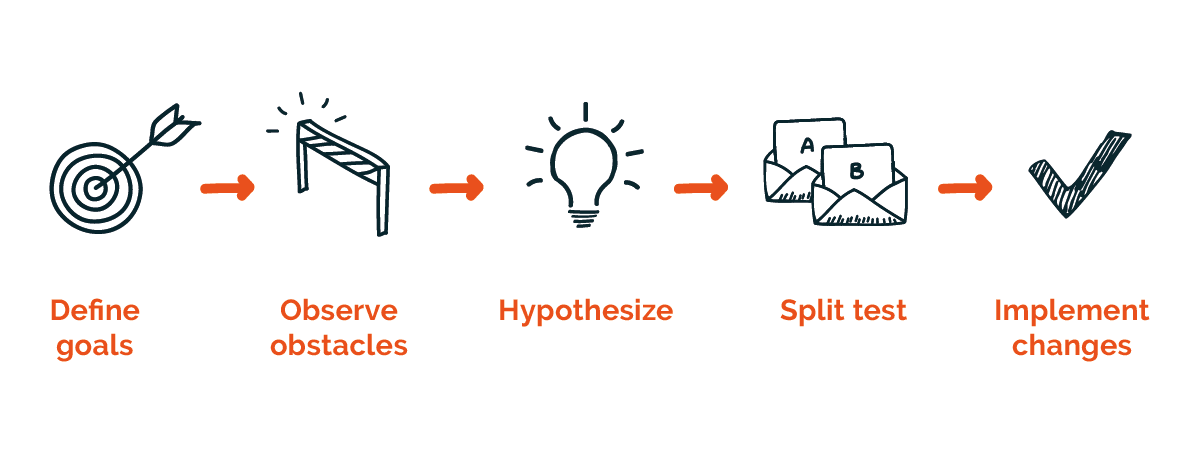

By taking a scientific approach to split testing, marketers can make a demonstrable impact on conversions and sales. Here’s how to get started:

- Define your high-level goals (e.g., to generate more revenue from emails).

- Observe the obstacles to achieving those goals.

- Hypothesize how best to overcome those obstacles.

- Split test and measure the results to see if your hypothesis holds true.

- Implement the change or revert to further testing.

Imagine that browse abandonment emails aren’t driving as much revenue as you’d hoped.

You might hypothesize that browsers need more confidence to make a purchase decision, and that adding social proof messaging to browse abandonment emails will therefore result in more conversions.

With this in mind, you can run an A/B test to prove or disprove the hypothesis. You can then use the results to shape future shopping recovery campaigns.

1. A/B testing automated emails

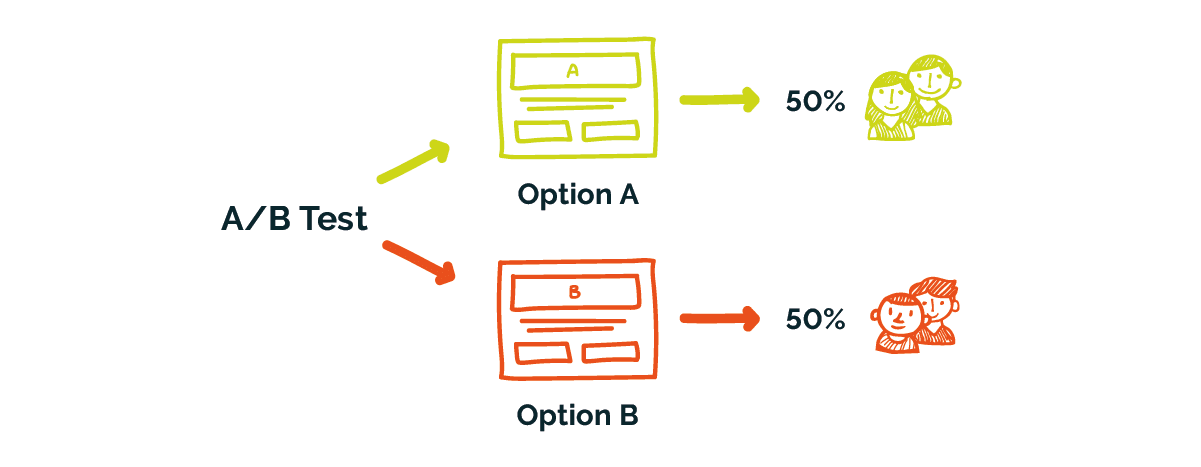

In an A/B test, you compare two variants of an email or email program against each other to decide which version is more effective.

How to use A/B tests

Use A/B tests when you want to optimize one aspect of a triggered email program. This works best when you have a strong hypothesis about a specific email element. For example: “A personalized hero image will result in more click-throughs than a generic hero image.”

You’ll create two versions, A and B, that differ only in the one variable you want to test. Then you’ll split them between two equally sized, randomly selected audiences and measure which variant performs better over time.
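A common way to get two equally sized, randomly selected audiences is to hash a stable customer identifier into a bucket, so each shopper lands in the same group every time the trigger fires. The Python sketch below assumes you have such an ID; `assign_variant` and the test name `"hero-image-test"` are hypothetical, and your email platform may already handle this split for you.

```python
import hashlib
from collections import Counter
from typing import Sequence


def assign_variant(customer_id: str, test_name: str,
                   variants: Sequence[str] = ("A", "B")) -> str:
    """Deterministically assign a customer to one test variant.

    Hashing the test name together with the customer ID gives each
    customer a stable, effectively random bucket: the same shopper always
    sees the same version for the life of the test, and customers are
    spread evenly across the variants.
    """
    key = f"{test_name}:{customer_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Simulate 10,000 hypothetical customers entering a two-way test.
    counts = Counter(
        assign_variant(f"cust-{i}", "hero-image-test") for i in range(10_000)
    )
    print(counts)  # the A and B counts should each be close to 5,000
```

Including the test name in the hashed key means a customer's group in one test is independent of their group in another. Passing three or more labels in `variants` gives the same even split for an A/B/n test.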

What factors should you test?

As with any other marketing campaign, you can A/B test anything that influences a customer’s actions. That includes:

- Subject line & preview text: If a customer isn’t tempted by the subject line, they may never see the rest of the email – so it’s important to get this right. You might test a different tone of voice, or add personalization such as the name of the abandoned product to your subject lines and preview text.

- Email copy: In cart or browse abandonment email campaigns, the emphasis should be on the abandoned product. But it’s important to include engaging supporting copy that tells customers why they received the email. You can vary the length and tone of voice, or add personalization such as the customer’s first name.

- Banners and images: Imagery is often the best way to grab the attention of distracted shoppers. You might try out different personalization options, such as basing the image content on the category of the abandoned product.

- Call to action: Even a great email campaign falls down if the call to action doesn’t compel customers to click through. The position, wording, and appearance of the call to action can all affect the click-through rate.

- Dynamic content: Elements like product recommendations, countdown timers, and product ratings are generated at open time to make emails more personal and compelling. Use A/B tests to measure how customers respond to different types of dynamic messaging.

Triggered emails like browse and cart abandonment alerts also have some additional aspects you might want to test:

- The delay before each email in a program: For shopping recovery emails, we normally recommend a delay of no more than 30 minutes before sending the first message. But the ideal delay can vary depending on the nature of your products and your customers’ buying habits, so it’s worth testing if you think you’re not getting the best results. For multi-step campaigns, you can also test the delays between subsequent emails in the sequence.

- Number of triggered emails sent: Marketers often worry that sending more than one email will antagonize customers. In fact, multi-step campaigns can deliver a 21% greater sales uplift than single-step triggers. A well-timed second or third reminder re-engages customers who were distracted from making a purchase.

- Cart layout (how product details are displayed): The heart of a cart or browse abandonment email is the reconstruction of the abandoned product(s). We recommend including an image of each abandoned product, a clear call to action, and some brief product information, and then testing how those elements are arranged.

Measuring A/B test success

To measure success, start with a hypothesis that names the element you’ll test and the metric you expect to improve. For example: “Including product recommendations in the email body will result in a higher click-through rate.”

To prove or disprove the hypothesis, you’ll then need to run the test on a large enough sample to be confident that any difference reflects a real pattern, not chance.
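One common way to decide whether a difference in click-through or conversion rates is just chance is a two-proportion z-test, and the same normal approximation gives a rough sample size to plan for before the test starts. The standard-library Python sketch below is a planning aid under stated assumptions, not a feature of any specific email platform: the function names are hypothetical, and the 5% significance level and 80% power defaults are common conventions rather than fixed rules.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two rates.

    conv_* are clicks or conversions, n_* are emails delivered.
    Returns (z, p_value); a small p-value (e.g. < 0.05) suggests the
    difference between B and A is unlikely to be chance alone.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate assuming no real difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # e.g. no conversions at all in either group
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def sample_size_per_variant(base_rate: float, min_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate recipients needed in EACH variant to detect an
    absolute lift of `min_lift` over `base_rate`."""
    p1, p2 = base_rate, base_rate + min_lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / min_lift ** 2)


if __name__ == "__main__":
    # Hypothetical results: 4.0% vs 4.8% click-through on 10,000 sends each.
    z, p = two_proportion_z_test(400, 10_000, 480, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print(sample_size_per_variant(base_rate=0.04, min_lift=0.01))
```

With a hypothetical 4% baseline click-through rate and a one-point lift worth detecting, `sample_size_per_variant` asks for roughly 6,700 recipients per variant. Smaller lifts need much larger samples, because the required size grows with the inverse square of the lift.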

Remember that if the test doesn’t generate the result you expected, it’s not a failure. You’ve just shown that one tactic isn’t as effective as you thought. Now, you can move on to focus on tactics that get results.

If your A/B test results don’t seem to hold up in the long run, check this breakdown of what could be going wrong – and how to fix it.

2. A/B/n testing

This is an extension of A/B testing. Rather than testing two versions of an email program (A and B) against each other, you’re testing more than two versions of the same email. ‘N’ simply means the number of variations.

How to use A/B/n tests

A/B/n testing lets you test a wider range of variations than an A/B test, and can reach a conclusion faster than running a series of separate A/B tests. Use A/B/n tests when you want to optimize one area of your email but don’t have a strong hypothesis about which content will work best.

For instance, imagine you want to increase your conversion rate from wish list abandonment emails.

You’ve already established that customers respond well to product recommendations in wish list emails, but you want to optimize the mix of recommendations.

You could test different combinations of recently browsed products, crowdsourced recommendations, and most popular products in the shoppers’ preferred category and see which ones have the highest conversion rates.

Measuring A/B/n test success

It may seem intuitive to proclaim the version with the highest uplift as the winner.

But for a true winner to be declared, you need to look at the difference between the top-performer and the runners up.

Even if the difference between the winning version and the control version is significant, the difference between the winner and the other variations might not be.

The risk here is that you deploy the top-performing variation, writing off other variations that could have similar – or better – results.

The solution is to view A/B/n tests as multiple variations of content competing against one another, not simply competing against the control version.

If the leading two variants don’t have significantly different results, you could run an A/B test of those two versions. Alternatively, you could view the test as a tie and look to other considerations to decide which version to implement.
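To treat an A/B/n test as variants competing against one another, you can compare the leader against every other variant rather than only against the control. The sketch below uses a pooled two-proportion z-test and applies a Bonferroni correction (dividing the significance threshold by the number of comparisons), which is one simple, conservative way to account for running several comparisons at once. The function names, variant names, and counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_sided_p(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Pooled two-proportion z-test; returns the two-sided p-value."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_a / n_a - conv_b / n_b) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def leader_and_contenders(results: dict, alpha: float = 0.05):
    """results maps variant name -> (conversions, sends).

    Returns the top-performing variant and the list of variants it does
    NOT significantly beat at a Bonferroni-adjusted threshold.
    """
    if len(results) < 2:
        raise ValueError("need at least two variants to compare")

    def rate(name):
        conversions, sends = results[name]
        return conversions / sends

    ranked = sorted(results, key=rate, reverse=True)
    leader = ranked[0]
    threshold = alpha / (len(ranked) - 1)  # one comparison per other variant
    contenders = [
        name for name in ranked[1:]
        if two_sided_p(*results[leader], *results[name]) >= threshold
    ]
    return leader, contenders


if __name__ == "__main__":
    # Hypothetical wish list test: control vs three recommendation mixes.
    results = {
        "control": (400, 10_000),
        "recently_browsed": (520, 10_000),
        "crowdsourced": (505, 10_000),
        "most_popular": (430, 10_000),
    }
    print(leader_and_contenders(results))
```

In this made-up example, `recently_browsed` leads but is not significantly better than `crowdsourced`, so the two are effectively tied: the situation where a follow-up A/B test, or other business considerations, should decide. An empty `contenders` list would indicate a clear winner.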

3. Control groups

Control groups help you work out how one marketing channel or piece of content is contributing to engagement and sales. They are based on the “removal effect” principle – you can see the value of a particular marketing channel by removing it from the customer journey and measuring the impact on sales.

How to use control groups

Use control groups when you want to work out the contribution of a triggered email campaign to your overall conversions and sales uplift.

In a control group test, you select a percentage of customers to be excluded from a specific type of marketing, such as triggered emails. The activity of these customers is compared against those who were exposed to the program.

The difference between the control group and the exposed group gives you a good idea of how triggered emails impact conversion rates and sales uplift.
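Once you have conversions and revenue for both groups, the removal-effect comparison comes down to simple arithmetic. The sketch below uses hypothetical numbers and a hypothetical `GroupResult` record; in practice you would also check that the gap between the groups is statistically significant before relying on it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GroupResult:
    customers: int      # customers who triggered the email program
    conversions: int    # customers who went on to purchase
    revenue: float      # revenue from those customers


def incremental_impact(exposed: GroupResult, control: GroupResult) -> dict:
    """Estimate the lift attributable to triggered emails (removal effect)."""
    exposed_rate = exposed.conversions / exposed.customers
    control_rate = control.conversions / control.customers
    relative_uplift: Optional[float] = (
        (exposed_rate - control_rate) / control_rate if control_rate else None
    )
    # Revenue per customer in each group; the gap, scaled to the exposed
    # group's size, estimates revenue that would be lost without the emails.
    rpc_gap = exposed.revenue / exposed.customers - control.revenue / control.customers
    return {
        "exposed_rate": exposed_rate,
        "control_rate": control_rate,
        "relative_uplift": relative_uplift,
        "incremental_revenue": rpc_gap * exposed.customers,
    }


if __name__ == "__main__":
    result = incremental_impact(
        exposed=GroupResult(customers=95_000, conversions=3_800, revenue=380_000.0),
        control=GroupResult(customers=5_000, conversions=150, revenue=15_000.0),
    )
    print(result)
```

In this made-up example, the exposed group converts at 4% against 3% for the control group, a relative uplift of about 33%, and the revenue-per-customer gap implies about 95,000 in incremental revenue.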

Measuring email success with control groups

It might seem scary at first to exclude some customers from email marketing efforts. Brands might be put off by the possibility of losing even a small percentage of revenue.

But control groups are one of the best ways to prove the value of your marketing channel, so you can continue to invest in it going forward.

Marketers can size the control group relative to overall traffic: the more customers you reach, the smaller the percentage you need to hold out. If you send a large volume of cart abandonment emails every day, then including a very small percentage of customers in the control group will be sufficient.
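To see why high-volume senders can hold out a smaller percentage, you can estimate the smallest control share that still detects the uplift you care about. This sketch uses a normal approximation for two groups of unequal size; `min_control_share`, the 0.5% search step, and the example figures are assumptions for illustration, and it assumes you already know your baseline conversion rate and the smallest uplift worth detecting.

```python
import math
from statistics import NormalDist
from typing import Optional


def min_control_share(base_rate: float, min_lift: float, total_customers: int,
                      alpha: float = 0.05, power: float = 0.8) -> Optional[float]:
    """Smallest control-group share (searched in 0.5% steps, up to 50%)
    that can detect an absolute uplift of `min_lift` from emails.

    base_rate: conversion rate expected WITHOUT emails (control group).
    Returns None if even a 50/50 split is too small.
    """
    p_control = base_rate
    p_exposed = base_rate + min_lift
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    for step in range(1, 101):  # 0.5%, 1.0%, ..., 50%
        share = step / 200
        n_control = share * total_customers
        n_exposed = total_customers - n_control
        se = math.sqrt(p_control * (1 - p_control) / n_control
                       + p_exposed * (1 - p_exposed) / n_exposed)
        if z * se <= min_lift:
            return share
    return None


if __name__ == "__main__":
    # Hypothetical: 3% baseline conversion, emails expected to add one point.
    for volume in (20_000, 200_000):
        print(volume, min_control_share(0.03, 0.01, volume))
```

With these hypothetical figures, 200,000 customers over the test period need a holdout of only about 1.5%, while 20,000 customers need about 14%.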

Getting started with triggered email optimization

Triggered emails can be a real “quick win” for your business. They instantly open up new opportunities to communicate with customers and recover lost revenue.

But to make the most of these opportunities, it’s vital to test and optimize campaigns regularly. By understanding how each testing method contributes to your overall marketing strategy, you’ll be able to:

- Optimize trigger campaigns based on customer behavior.

- Prove the value of email marketing tactics.

- Know which channels and tactics to prioritize.

For more insights on how to drive more email revenue, download our eBook: 6 ways to drive more email revenue.