Triggered emails are key to many online brands and retailers, especially when it comes to delivering helpful marketing that maintains engagement with customers, keeps your brand front of mind and, in most cases, drives customers to convert or complete a purchase.

But what are they?

Triggered emails are automated emails, sent based on customers’ behavior. They help businesses deliver personalized, real-time content to shoppers at the moment they are most likely to convert.

Triggered emails versus BAU

Triggered emails are not the same as segmented or business-as-usual (BAU) emails. Because triggered emails are usually prompted by an individual’s activity, they are not sent as part of a scheduled send, so their content is likely to be very topical and timely.

Types of triggered emails

Here are 5 popular types of triggered emails.

1) Cart and browse abandonment emails

These are the best “quick wins” for triggered messaging within marketing automation. Cart abandonment is when a shopper adds an item to their cart but leaves the site before completing their purchase, while browse abandonment is when a shopper abandons a browsing session without taking any further actions on the site.

These shoppers are sent a personalized reminder email – including details of the items they carted or browsed – encouraging them to visit the website again.

This means more customers will complete the purchase on your site, rather than starting again with a Google search for the product they found, and possibly buying from your competitor.

The average Fresh Relevance client sees a 13% sales uplift with cart and browse abandonment emails.
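The distinction between cart and browse abandonment described above can be sketched in a few lines of Python. This is only an illustration: the `Session` shape and the `abandonment_type` function are hypothetical names, not part of any real platform's API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Session:
    """A finished site visit (hypothetical shape, for illustration only)."""
    viewed_products: List[str]
    carted_products: List[str]
    purchased: bool


def abandonment_type(session: Session) -> Optional[str]:
    """Return 'cart', 'browse', or None if no abandonment email applies."""
    if session.purchased:
        return None  # the purchase was completed, so nothing was abandoned
    if session.carted_products:
        return "cart"  # items were carted but never bought
    if session.viewed_products:
        return "browse"  # products were viewed but nothing was carted
    return None  # there is nothing to remind the shopper about
```

The reminder email would then be personalized with `carted_products` or `viewed_products`, depending on which type is returned.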

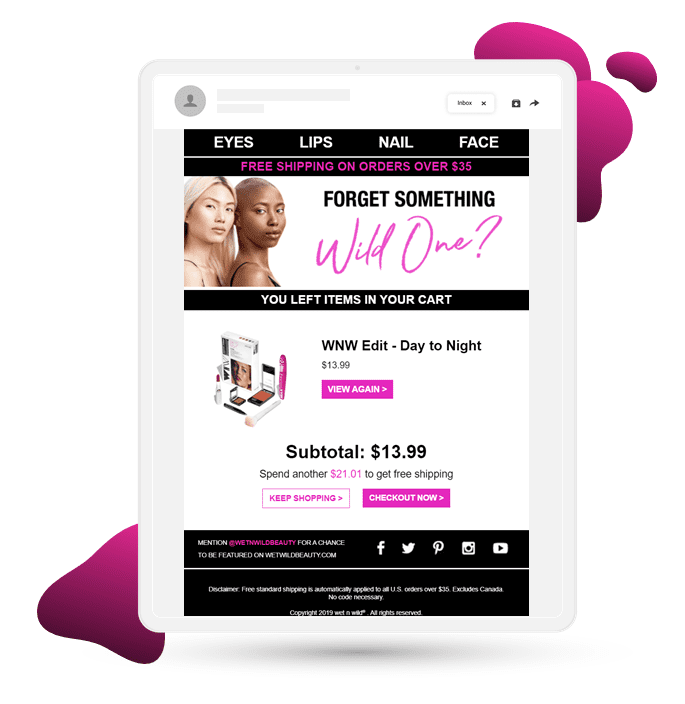

Here’s a cart abandonment email example from Markwins Beauty Brands. The cosmetics retailer follows best practice by including details of the items that the shopper abandoned. They also highlight the free shipping spending threshold, helping to increase the AOV.

2) Account creation emails

Account creation emails are triggered when a shopper registers. An account can be a great way for customers to track their order history, and a welcome email or transactional email at this point can make for an easier checkout experience.

3) Back in stock notification

Back in stock alerts target shoppers who browsed products that were out of stock at the time and are now available again. This type of email within your digital marketing campaign helps turn a potentially frustrating customer experience into a positive one.

4) Post-purchase program

This is a sequence of emails within your email marketing campaign that is triggered after a shopper makes a purchase, providing useful content and product recommendations related to their recent purchase.

5) Replenishment emails

These emails are triggered after the purchase of consumable products (such as vitamins and pet food). To boost customer retention, they are sent around the time that a typical customer might need to re-order.
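As a rough sketch of the timing logic, the send date can be derived from the purchase date and a typical number of days of supply. The product IDs, supply figures and the `lead_days` parameter below are all assumptions made for illustration; real values would come from your own order data.

```python
from datetime import date, timedelta

# Hypothetical typical days of supply per product (illustrative values only).
TYPICAL_SUPPLY_DAYS = {"vitamins-60-caps": 60, "dog-food-5kg": 30}


def replenishment_send_date(product_id: str, purchased_on: date, lead_days: int = 5) -> date:
    """Date to send the reminder: a few days before the product is expected to run out."""
    supply_days = TYPICAL_SUPPLY_DAYS[product_id]
    return purchased_on + timedelta(days=supply_days - lead_days)
```

For example, a 30-day bag of pet food bought on 1 January 2024 would get its reminder on 26 January 2024 with the default five-day lead.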

Other examples

Other triggered emails include:

- Complete your profile reminder

- Booking/order confirmation

- Coupon/discount incentive to buy again

Why are triggered emails important?

There are several benefits to using triggered emails. Let’s dive right in.

Improved customer experience

When done right, triggered emails can enhance the customer experience. This is because each email is sent at the right time, based on the customer’s stage in their lifecycle and their behavior.

Increased CTR and revenue

Triggered emails have higher click-through rates (CTR) than BAU emails. They also generate more revenue. This is because these messages are often sent to high-intent customers when they are most likely to make a purchase. The email shows them relevant content that can give them the final nudge to convert.

Customer retention & loyalty

Automated emails can be very effective in increasing customer retention and loyalty. By collecting data about customer behavior, you can detect when customers are at risk of churning. By using replenishment, back-in-stock or price drop emails, for example, you can convince customers to continue buying from you.

Increased efficiency

As triggered emails are automated, you can easily scale this tactic and reap the rewards, which would be a near-impossible task if done manually. Simply set up the email content and rules, and the emails will be sent for you automatically.

Triggered emails best practices

There are several best practices when it comes to setting up your triggered emails.

Personalize, personalize, personalize

If you’re sending out triggered emails, chances are that you have tons of customer data at hand that you can use to personalize your email marketing. With personalization, we don’t just mean name personalization. You could also include personalized dynamic content and product recommendations based on browse and purchase behavior.

This will increase your CTR, conversion rate and AOV.

The importance of your call-to-action

Each triggered email should include a clear CTA. Otherwise, customers might look at your email and not perform the desired action, and you’ll miss your chance for conversions.

Keep it clear & concise

Make messaging in your triggered emails clear and concise. You don’t want super long emails, as customers likely won’t take the time to read the whole thing and might miss the action you want them to take. Focus on the reason for the email, and base your content around that.

Avoid sending too many emails

No matter which type of triggered email you’re using or for what purpose, the reason for deploying it is always the same: customer-to-brand interaction. You want your (potential) customers to engage with you repeatedly. However, when using marketing automation there’s a fine balance between sending helpful, informative communications and oversending.

Be mindful of not only the content and timeliness of your emails, but also the volume of emails you’re sending to each customer.

Ensure your timing is right

Triggered emails work best when sent at the right time. This doesn’t mean that the timing is the same for each company. In fact, each business will have a different ‘sweet spot’ for their emails. Which leads us to our final point…

Test & optimize

Don’t set and forget. You should test and optimize your triggered emails regularly: the timing (as mentioned above), the frequency and the contents. Which product recommendations work best? What color should the CTA be? Are three cart abandonment emails better than two? These are all questions you should be asking yourself, and testing to find the answers.

How to avoid sending too many triggered emails

How many emails could a customer receive from your brand in a given week? Is it 4? 5? Maybe more? Do all customers need to be included in all comms? Should you exclude ‘active’ customers from generic bulk activity?

All of this is made easier to control and define in Fresh Relevance by using customer data through a variety of tools:

- Remarketing suppression timeframes – by default we don’t allow sending more than one email every 24 hours to a customer (this setting can be changed)

- Use of marketing rules to add a hierarchy to your triggered emails, for example ‘has not received a Cart Abandon email in the last week’

- Importing or using segments to have the ability to include or exclude customers from receiving certain triggered emails
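To make the three controls above concrete, here is a minimal Python sketch of how such checks might be combined before a triggered email goes out. It is not the Fresh Relevance implementation or API: the function name, the parameters and the shape of the send log are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta
from typing import List, Set, Tuple

# (email_type, sent_at) pairs for one customer; a hypothetical send log format.
SendLog = List[Tuple[str, datetime]]


def may_send(
    email_type: str,
    now: datetime,
    send_log: SendLog,
    customer_segments: Set[str],
    excluded_segments: Set[str],
    suppression: timedelta = timedelta(hours=24),
    per_type_gap: timedelta = timedelta(days=7),
) -> bool:
    """Return True only if no suppression rule blocks this email."""
    # Segment rule: skip customers in any excluded segment.
    if customer_segments & excluded_segments:
        return False
    for sent_type, sent_at in send_log:
        age = now - sent_at
        # Suppression timeframe: at most one email of any type per window.
        if age < suppression:
            return False
        # Marketing rule: e.g. no second cart abandonment email within a week.
        if sent_type == email_type and age < per_type_gap:
            return False
    return True
```

With the defaults, a customer who received a cart abandonment email three days ago could still receive a browse abandonment email today, but not another cart abandonment email.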

Trying to drive those all-important interactions with customers can prove fruitless if you just ‘add to the noise’ or end up in their spam folder because you haven’t followed marketing suppression best practice (one marketing email a day is enough).

Triggered email optimization

With Fresh Relevance you can test optimal email timings, number of emails, and email content with our Optimize Center.

Here are some of our top considerations for getting your triggered emails right:

Basic optimizations

Wait time

One of the first things you can optimize for triggered emails (such as cart and browse abandonment emails) is timing. In some instances with clients, we’ve seen that a shorter wait time actually increases conversions and sales uplift. On the other hand, clients who sell higher consideration products, such as jewelry, can benefit from longer wait times.

Popular vacation rentals and holiday resorts business Awaze tested deployment times of browse abandonment emails for their cottages.com and Hoseasons brands, comparing the difference in performance between sending the first email 15 minutes, 30 minutes or 60 minutes after abandonment. After one month of testing they found that browse abandonment emails sent 15 minutes after abandonment yielded the best conversion rates.

Replicating your trigger programs and then adjusting the wait times to test against one another will really help you find the sweet spot for your email cadences.

> Test Stage 1 wait time: 20 mins vs. 30 mins or 30 mins vs. 60 mins

> Test Stage 2 wait time: 24 hrs vs. 36 hrs or 24 hrs vs. 48 hrs
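One way to run a wait time test like the Stage 1 example above is to split customers deterministically between two copies of the trigger program and compare conversion rates afterwards. The sketch below is a generic illustration, not how the Optimize Center works internally; the function names and the hash-based split are assumptions.

```python
import hashlib

# Stage 1 variants from the test suggestion above (wait time in minutes).
WAIT_MINUTES = {"A": 20, "B": 30}


def assign_variant(customer_id: str, test_name: str = "stage1-wait") -> str:
    """Split customers roughly 50/50; the same customer always gets the same variant."""
    digest = hashlib.sha256(f"{test_name}:{customer_id}".encode("utf-8")).digest()
    return "A" if digest[0] % 2 == 0 else "B"


def conversion_rate(conversions: int, emails_sent: int) -> float:
    """Share of sent emails that led to a purchase (0.0 if nothing was sent)."""
    return conversions / emails_sent if emails_sent else 0.0
```

Hashing the customer ID together with the test name keeps the assignment stable across sessions while still giving a different split for each new test.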

Number of stages

Some shoppers want to sleep on a purchase, especially if it’s an expensive or one-off buy. Others simply get distracted or are still shopping around when that first abandonment email arrives. That’s why we recommend testing the effectiveness of multi-stage triggered email campaigns. In fact, our clients see an average sales uplift of 21% by adding multiple stages to their triggered abandonment emails.

> Test number of stages: 1 email vs. 2 emails or 2 emails vs. 3 emails

> Test coupon/discount at different stages: Stage 1, Stage 2 or Stage 3

Getting Personal offers a good example of a successful multi-stage triggered email campaign. The company has seen a 54% sales uplift with their multi-stage browse abandonment email campaign.

Look and feel

The look and feel of an email is important; a visually unappealing email is less likely to entice recipients to click through to your website or read all of the content.

Try testing different versions of the same element, such as the copy, content, images or the messaging, size and color of your CTA.

Next level optimizations

Content displayed at each stage

Once you’ve got your multi-stage triggered email campaigns up and running, you can start to optimize the content you display in each email. You can test the efficacy of discounts at different stages – some retailers wait until the second or third email to provide a discount, for example. Other areas could include testing at which stage testimonials and social proof are most effective.

Product recommendations

Product recommendations are a great addition to triggered emails, enabling you to showcase more of your stock and help shoppers find the perfect product for them. Vapouriz added product recommendations to their cart abandonment emails, recommending items shoppers can add to their cart to meet the free delivery threshold. This has helped them achieve a 10% increase in AOV from cart abandonment emails.

Additionally, you can test which types of product recommendations work best, for example, similar products vs products tailored to the individual’s browsing history.

Banners

Personalized banners are a great way to engage customers and increase CTR. Getting Personal has seen success with banners that are personalized with the customer’s name. The company tested the effects of this dynamic name personalization on their emails with a series of A/B tests and found that personalized banners increased their click-through rate by 15%, their conversion rate by 7% and sales by 37%.

You could also try testing the effects of banners with category or product personalization.

Subject line personalization

Including the recipient’s name or the name of the product they’re interested in can be an effective way to stand out in a crowded inbox and boost open rates and click rates, as customers know what to expect from the email.

Try testing product personalization, name personalization or a combination of both in one subject line.

Advanced optimizations

Fixed delay wait times

Dynamic wait times aren’t ideal for every business. If you’re a B2B company, for example, a cart abandonment email that gets triggered in the evening is more likely to sink into your recipient’s inbox overnight.

With Fresh Relevance, you can manipulate the send time dynamically and test the effectiveness of dynamic vs fixed wait times. For example, you could test a dynamic 20-minute delay vs a 20-minute delay during office hours with a pause outside office hours.
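The difference between the two variants in that example can be sketched as follows. This is an illustration under stated assumptions, not the platform's own logic: office hours are assumed to be 09:00 to 17:00, Monday to Friday, time zones are ignored, and all function names are hypothetical.

```python
from datetime import datetime, time, timedelta

OPEN, CLOSE = time(9, 0), time(17, 0)  # assumed office hours, Monday to Friday


def next_office_moment(t: datetime) -> datetime:
    """Return t if it falls inside office hours, otherwise the next opening time."""
    while True:
        if t.weekday() < 5:  # Monday is 0, Friday is 4
            if t.time() < OPEN:
                return datetime.combine(t.date(), OPEN)
            if t.time() < CLOSE:
                return t
        # After closing time or at the weekend: try 09:00 on the following day.
        t = datetime.combine(t.date() + timedelta(days=1), OPEN)


def dynamic_send_time(abandoned_at: datetime, delay: timedelta = timedelta(minutes=20)) -> datetime:
    """Plain delay: send a fixed interval after abandonment, whatever the hour."""
    return abandoned_at + delay


def office_hours_send_time(abandoned_at: datetime, delay: timedelta = timedelta(minutes=20)) -> datetime:
    """Count the delay down only while the office is open, pausing otherwise."""
    t = next_office_moment(abandoned_at)
    remaining = delay
    while True:
        closing = datetime.combine(t.date(), CLOSE)
        available = closing - t
        if remaining < available:
            return t + remaining
        remaining -= available  # use up the rest of today, carry on next opening
        t = next_office_moment(closing)
```

Under these assumptions, a cart abandoned at 19:30 on a Tuesday would trigger an email at 19:50 with the plain delay, but at 09:20 on Wednesday with the office-hours variant.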

Advanced subject line personalization

The more tailored your subject line is, the better you can set expectations for your recipients. But with so many options, it’s worth taking this optimization further. With Fresh Relevance, you can assess whether your subject line personalization performs better at a category, product or even SKU level. You can also look at whether naming one product is enough or if listing all the products the shopper has carted or browsed is more effective.

Cart layout optimization

How the products in your cart abandonment emails are displayed can have a huge impact on customer interaction. Fresh Relevance can help you test layouts and get the right balance of information to display, for example by testing large vs small product images or different social proof indicators.

Audience targeting

Who you target for your abandonment campaigns is an important consideration. Should you target your whole customer base or just those with higher intent? With Fresh Relevance, you can test different criteria so that you only contact the most relevant shoppers and leave those with lower intent to your BAU campaigns. For your browse abandonment emails, for example, you could test the difference between sending to those who have visited your website more than twice in 7 days vs all visitors.
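The "more than twice in 7 days" criterion from that example could be expressed roughly like this. The data shape (a mapping from visitor ID to visit timestamps) and the function name are assumptions for illustration only.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def high_intent_visitors(
    visits: Dict[str, List[datetime]],
    now: datetime,
    min_visits: int = 3,  # "more than twice" means at least three visits
    window: timedelta = timedelta(days=7),
) -> List[str]:
    """Return the IDs of visitors with enough visits inside the recent window."""
    cutoff = now - window
    return sorted(
        visitor
        for visitor, times in visits.items()
        if sum(1 for t in times if cutoff <= t <= now) >= min_visits
    )
```

The test group would receive browse abandonment emails only if they appear in this list, while the control group would be drawn from all visitors.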

One last thing to bear in mind when looking to test and trial different hypotheses is to make sure you keep the variables to a minimum so you can truly gauge what’s working best.

Also, remember that this isn’t an exact science and there isn’t a ‘one size fits all’ approach – different customers, segments, brands and messaging will often produce quite different results.

Final thoughts

You can learn more about getting started with triggered emails and finding the right technology to help you succeed with our Triggered Email Buyer’s Guide.